
Instrument Mimics: the birth of sampling

Musical instruments are mimicked throughout the natural world: from the prodigious talents of songbirds and the singing sand dunes of the Sahara to the instrumental mimicry of beatboxers and vocalists, the sounds of musical instruments have popped up in all sorts of unusual places.

If, however, you aren’t interested in evolutionary reproduction, the physics of fluid dynamics or … let’s say evolutionary reproduction, why would you want to replicate the sound of an already existing instrument in a new form? There are a range of technologies, inventors, and ideas that show us how the sounds of traditional musical instruments can be repackaged and reworked into new forms and for new purposes.

Hundreds of different technologies have been used to recreate the sound of other instruments, each targeting a different musical goal, budgetary constraint or engineering problem: different playability (or learning curve), increased portability, cost savings, more robustness, or even just a change of materials because the originals are difficult to supply, source, or use. The key choice that needs to be made when adapting an instrument into a new form is one of fidelity: is the goal to recreate the original, or to push boundaries and create something new?

The most established method of instrument recreation for the last few hundred years has been sampling. This is a process whereby you capture an existing sound and reform it into something new. Its older cousin, synthesis, creates sounds from basic components by combining or subtracting pure tones, producing a sound which perhaps references an existing sound but is built in a completely different way. This is most often done now with circuitry, but has an analogue in the world of pipe organs and accordions, which recreate tones by shaping harmonic overtones, and is easily the subject of its own article. The most common methods for sampling used today involve capturing the real sound of an instrument being played (or singers singing) and chopping the recording down into individual notes that can be recombined on the fly into new musical melodies and harmonies as needed.

There are many reasons to sample real instruments or to explore other methods of mimicking the sounds of existing instruments. They are often used where the musical performance is not the focus of the art but instead a component of a larger cultural experience or output: movies, the theatre, background music in shopping malls and airports, and studios with higher production values and ambitions than budgets or resources. Often these instruments, originally created to replace real musicians or even entire ensembles, have found their longevity and strengths in becoming entirely new categories of instrument with their own roles, performance specialists, sonic qualities, and history.

The Mechanical Age

Instrument makers have always used the sounds of existing instruments as inspiration for their new creations. Early piano makers added a keyboard to what was essentially a hammered dulcimer to create a new instrument with increased playability and versatility but with the percussive timbre of the existing one. Adolphe Sax recreated the clarinet in yellow brass in order to make an instrument that had similar tonal qualities to the clarinet but was waterproof and could be marched with in the Parisian rain.

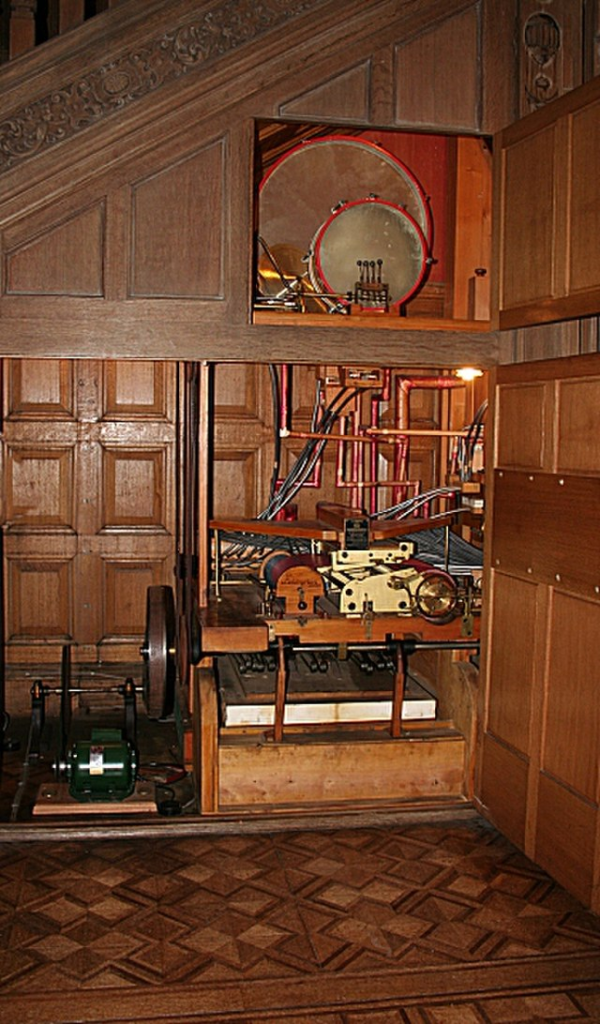

The 19th century was where these ideas took off, evidenced by the popularity of the player piano (an ordinary piano with the addition of a mechanism that could play from rolls of punched card, enabling drawing rooms across the empire to create beautiful music with only the skills of a tricyclist) and the family of instruments we refer to as orchestrions. These mechanical beasts essentially repackaged existing instruments into new portable and automated forms. They used actual violins, flutes, drums and pianos rather than recreating their sounds through other technologies, but automated them to make instruments that were entirely new and could play music not playable by human hands.

This mechanical approach was taken to its apotheosis in the early 20th-century cinema organs, which were able to recreate horse hooves, thunderclaps, gunshots, foley and a whole range of different sounds that would be impractical to produce inside a building without burning it down or having a small army unit on standby. Anyone who has ever heard a fairground organ in full force knows the versatility of these inventors in incorporating existing instruments and noisemakers into their calliopes.

While these mechanical beasts share a common tone quality and philosophy with modern sampling (the insistent sawing of the roboticized violin in an 1890s orchestrion has more than a passing similarity to the Casio keyboard string sound many of us played with in the 1990s), these instruments belong more to the domain of early recording technology than to modern performance technology. They were a way of capturing a performance and recreating it for audiences outside the concert hall and without the need to hire a real musician, an early parallel to physical media and streaming.

Capturing these sounds and replaying them on demand has become both an art and a craft over the last two centuries, developing in parallel to the concept of synthesis. Synthesisers create their own sounds using discrete components and have really become their own category of instruments and sounds, and an integral part of electronic music. However, a lot of early synthesisers were trying to recreate existing sounds.

It can be helpful to think of the church organ as a true analogue synthesiser, combining pipes of different frequencies and tone colours to create new sounds. These instruments might not have had velocity control on their keyboards (and only have on/off ivory stops rather than twiddly buttons), but they were able to recreate the sounds of other instruments such as flutes, oboes, and brass. As they developed, organ builders created experimental and extravagant stops which would mimic trumpets, voices, or strings, a development we can chart through to the theatre organs of the 20th century. These instruments could all be played live and play music that had never been played before, so they became instruments in their own right rather than a performance capture, and musicians started to specialise in them and develop the repertoire alongside the instruments themselves.

The Electronic Age

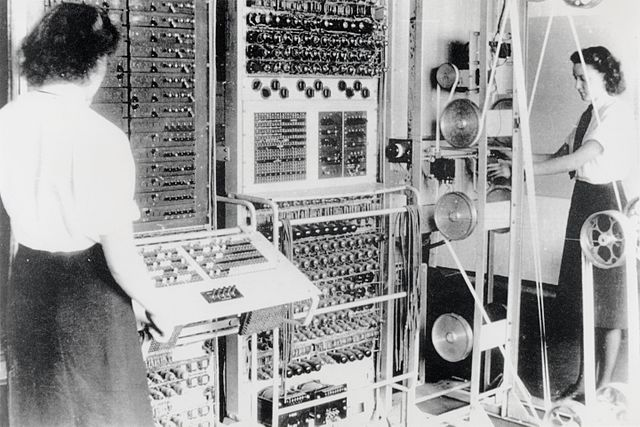

After the Second World War, engineers and inventors were excited to try out new technologies that had been developed for war. Magnetic tape was invented in Germany in 1928 and was championed and developed by the Nazi state (Hitler liked it as it meant he could do ‘live’ radio broadcasts from cities he was not physically present in, so confusing the Allied bombers); meanwhile the US and UK had made great advances in electro-mechanical computing, valve amplification, and transistors (invented in 1947). These technologies allowed the manipulation and amplification of sound in ways previously unimagined. Gramophones and radio stations no longer had to deliver their entire performances ‘as live’, and recording engineers were able to use effects or new sounds with huge rooms full of noisy equipment. Artistic developments of the era included conceptual ideas such as musique concrète (pieces made by stitching together sections of magnetic tape, or playing with other forms of sound generation), spectralism (using the analysis of audio to generate acoustic performance works), and the serial and experimental works of Stockhausen, Cage, and Boulez.

During this exciting time what could be considered the first electronic sampler was developed. The Chamberlin organ was developed in Wisconsin in the 1950s by Harry Chamberlin, and used short strips of magnetic tape with recordings of members of the Lawrence Welk Orchestra, made in a converted studio/cupboard in the inventor’s house. A Birmingham tape engineering company, Bradmatic, saw this idea and developed it further, improving the reliability, recording quality, and selectable range of sounds. This new instrument, the Mellotron, was released in the early sixties and became a huge success, partly due to the quality of the instrument, and partly due to the celebrity endorsements at its release (Eric Robinson, a well-known BBC bandleader, was an early investor and helped make the new recordings).

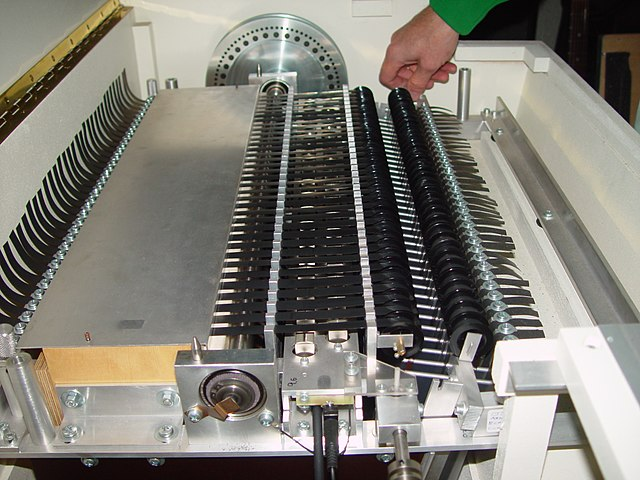

The Mellotron’s mechanism was complex but surprisingly clever. Behind each key of its main keyboard was a length of magnetic tape (holding 7-8 seconds of pre-recorded audio) and a motorised playback head (like those found in a cassette player). When a key was pressed the tape would be pushed against the head, and the head would activate, pulling the tape through and picking up whatever signals were on it. A clever, if seemingly primitive, mechanism involving lead counterweights would ensure consistent tension and the safe return of the tape to its starting point when the key was released. The sound from all of these tape heads was combined, amplified, and sent off to a speaker.

Despite some tonal quirks (the tape would often flutter; the motor would jam if you played too many notes at the same time, causing the tape to slow and lose pitch; some of the samples included accidental noises like chairs being scraped on the floor; tapes could degrade under hot lighting or around smoke) the Mellotron was a huge success and found its way from Variety/Jazz onto major pop records: The Beatles used it in the famous intro of ‘Strawberry Fields Forever’, Rick Wakeman played it on David Bowie’s ‘Space Oddity’, and more than 30 years after its invention (and long after the development of digital sampling) it was used on Oasis’ ‘Wonderwall’ and Radiohead’s ‘OK Computer’. The instrument was particularly valued for its warm, lush tones and personality. Originally intended as a cheaper substitute for an orchestral section, it became an instrumental choice used to bring lushness and warmth to a recording.

Hardware Samplers

With improvements in consumer electronics, digital computing, and audio quality, the 1980s heralded a new era of sampling hardware. Many synthesisers and new instrument technologies found their feet during this era, but the addition of fast computer memory and the MIDI protocol enabled hardware samplers to come into their own.

The Fairlight Computer Musical Instrument was invented in Sydney, Australia in the late 1970s by Peter Vogel and Kim Ryrie, who had set up as a home business. Their experiments in digital synthesis led them to coin the term sampling, as they realised that playing a short audio recording at different speeds (and thus changing the pitch) resulted in a more realistic sound than synthesis had so far managed. Despite limited memory, which capped each sample at around a second, and a price that put it out of the reach of all but the richest studios, the Fairlight became one of the most influential instruments of the 80s and 90s, being used by artists across the charts and breaking the ground for a raft of new digital samplers and synthesisers.
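The link between playback speed and pitch can be sketched in a few lines of Python. This is only an illustration of the principle, not the Fairlight’s actual implementation: the function name `repitch`, the use of linear interpolation, and the 8 kHz test tone are all assumptions made for the example.

```python
import math


def repitch(samples, semitones):
    """Play a recording back faster or slower by resampling it.

    Raising the pitch by 12 semitones doubles the playback speed,
    so the result is half as long (hypothetical helper, for illustration).
    """
    if not samples:
        return []
    speed = 2.0 ** (semitones / 12.0)  # equal-tempered speed ratio
    out_len = max(1, int(len(samples) / speed))
    out = []
    for i in range(out_len):
        pos = i * speed  # fractional read position in the original
        left = min(int(pos), len(samples) - 1)
        right = min(left + 1, len(samples) - 1)
        frac = pos - left
        # Linear interpolation between the two nearest original samples.
        out.append(samples[left] * (1.0 - frac) + samples[right] * frac)
    return out


# One second of a 440 Hz sine wave at an assumed 8 kHz sample rate.
tone = [math.sin(2 * math.pi * 440 * n / 8000) for n in range(8000)]
octave_up = repitch(tone, 12)     # twice as fast, half as long
octave_down = repitch(tone, -12)  # half as fast, twice as long
```

The sketch also shows the cost of the trick: the higher the note, the shorter the sound, which matters a great deal when the original recording can only be about a second long.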

The key feature of the Fairlight was the versatility with which you could manipulate the audio samples. While the digital nature of the synthesis systems in the Fairlight resulted in an overly sterile sound, the speed and flexibility of its sampling engine was revolutionary. Whole tracks could be remade using its existing samples, or with sounds quickly played or sung into the system, then layered together and processed using cutting-edge technology.

Kate Bush used the Fairlight extensively (actually she used the unit owned by Peter Gabriel, who had the first one in the UK), notably on Running Up That Hill, where the ‘cello2’ sample was the source of the main sounds, and on Babooshka, which used a glass smash and insect sounds. It was also used on a-ha’s Take On Me and Duran Duran’s Rio.

Aside from its ubiquity at the top of the 80s charts, the Fairlight was a major influence on musical technology, and approaches to sampling, in two unconnected ways. First, the qualities of the instrument were paired with an exorbitant price tag: it cost about as much as a small house, and many musicians talked of having to choose between home ownership and Fairlight ownership. The biggest acts of the eighties, and their high-end studios, owned them (and would sometimes let other bands come and use them when available), but the technology was out of reach for the rest of the industry. This resulted in a stream of new hardware digital samplers entering the market in the 80s and 90s, with various reduced feature sets coupled with robustness, portability, and affordability. E-mu’s Emulator went without the computer monitor, QWERTY keyboard and external processing unit, offering a piano keyboard that could trigger simple samples with basic processing and mapping that had to be adjusted by ear (with no display). For all these severe musical limitations it was a quarter of the price, and so was a huge hit, rapidly followed into the market by units from Korg, Akai, Yamaha and many others.

This budget boom led to samplers in different forms, notably the drum machine and drum pads. Rather than a piano keyboard these would have a set of 4-12 black rubber velocity-sensitive pads, designed to be hit with sticks rather than played by fingers, and allowed the musician to load individual drum sounds onto each pad, combining the processed electronic sounds of the drum machines and Hammond organs of the 60s-80s with the playability and flexibility of a modern sampler. They could also be thrown in a backpack, so drummers didn’t even need to own a car, let alone a drum kit.

The other major influence of the Fairlight was in the sounds it came bundled with. Loaded in from a series of floppy disks, its banks of pre-processed sounds made their way onto many hits of the 80s and were mimicked and picked up by other artists and samplers. Most entertainingly idiosyncratic in this category was ORCH5, better known as the orchestra hit. This was a last-minute addition to the machine, the inventors dubbing a single note off a recording of Stravinsky’s great orchestral work The Firebird. This full orchestral stab (every instrument orchestrated across the same note in octaves, along with bass drum and timpani giving it some extra oomph) found itself sped up, slowed down, filtered and processed onto all sorts of records, from big pop hits and funk tracks to where it really found its home: R&B and Hip Hop.

Hip Hop had been developing its own relationship with sampling during the second half of the 20th century, using the analogue technology of turntablism. While not sampling in the sense it’s been used above, it brought the musical idea into the mainstream. Using a pair of record turntables, DJs would dub short sections of existing music and loop them, using the drum beat from one track and the horns or guitars from another, building layers out of looping samples and whole tracks out of pre-existing music that would then be rapped, played, or sung over. These were samples of existing music, rather than individual notes, so were not played like a keyboard (instead played on the turntables), but were processed and manipulated in many of the same ways as the more granular samples we have already looked at. Like the cinema organ they could be ‘played’ live, and gave musicians a new palette to work from that didn’t require the hiring and recording of a whole band.

A famous example of this kind of sampling is the Amen Break, which for film nerds can be thought of as the Wilhelm Scream of recorded music. It comes from the 1969 soul track “Amen, Brother” by The Winstons, a largely forgotten band, and a track that had almost no impact when it was released. It did, however, contain an otherwise unexceptional four-bar drum passage played by Gregory Coleman (reportedly added as the track was running short). Crucially it was a clean section of recording without any other instruments, meaning that it could be cleanly looped and rapped over. The speed was changed, and with it the pitch, so it can be found in tracks of both high and low energy, and as it gathered cultural resonance it was further divided, replicated and processed. It is, by one measure, the most sampled track in the history of music. Gregory Coleman, however, saw no royalties for any of its use, and was reportedly homeless when he died.

The copyright implications of sampling have been somewhat underexplored, partly because the technology is so new, and partly because the lines of copyright are blurry at that close a resolution. We forget that the modern form of sampling (replaying existing music, rather than ‘capturing’ the sound of a violin) has been around for less than half a century, and if you’re a young excited computer engineer who invents the word ‘sampling’ to describe what your new program can do, you aren’t going to think through the copyright ownership implications of replaying a single note of a classical work by Stravinsky.

Software Sampling

As computer memory and processing resources got better in the 1990s, the sampling capabilities of desktop computers really came into their own. Programs that had started as simple MIDI sequencers or audio editors added sampling features, enabling bedroom musicians to make their own samples or load sample instruments downloaded from the internet. Two of the heavyweights in the category were created a year apart and are still going strong today: EXS24 (recently renamed Logic Pro Sampler) was released in 2001 and Native Instruments Kontakt in 2002. Both of these programs enabled incredibly detailed sampling at the highest possible audio resolution, and provided a playground for sound designers to script fully realised instruments using complex manipulation of recorded samples. The origination is often the same – a musician or ensemble in a studio surrounded by microphones, painstakingly playing every note and every technique – but the tools now enable these sounds to be split into smaller and smaller components, separately triggering the attack of one note, the sustain, the transition between two notes (including slides or runs if asked for) and the note release and room sound. The increased resources also enable this to be delivered in full multi-channel richness rather than a stereo mix, meaning a composer can create a recording that never existed for a melody that has never been played.
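One small job inside any sampler of this kind is deciding which recording to play for a requested note. The sketch below is a hedged illustration rather than how EXS24 or Kontakt actually work: it assumes, unlike the exhaustive sessions described above, that only some notes were recorded, and the function name `pick_sample` and the use of MIDI note numbers (60 is middle C) are choices made for the example.

```python
def pick_sample(note, recorded_roots):
    """Choose the nearest recorded note and the speed to play it at.

    `note` and `recorded_roots` are MIDI note numbers. Returns a
    (root, playback_rate) pair; a rate of 1.0 means the recording is
    played back unchanged. Hypothetical helper, for illustration only.
    """
    if not recorded_roots:
        raise ValueError("no recorded samples to choose from")
    # Nearest recorded note wins; on a tie, prefer the lower recording.
    root = min(recorded_roots, key=lambda r: (abs(note - r), r))
    # Each semitone of difference scales the playback speed by 2^(1/12).
    rate = 2.0 ** ((note - root) / 12.0)
    return root, rate


# Suppose only C, E and G above middle C were recorded (an assumption).
roots = [60, 64, 67]
exact = pick_sample(64, roots)     # a recorded note plays as-is
stretched = pick_sample(72, [60])  # an octave up plays at double speed
```

The further a note is stretched from its recorded root, the less natural it sounds, which is one reason detailed libraries record as many notes as their budget and disk space allow.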

It’s not worth going into too much detail here, as the specifics move so quickly they will be out of date before the paragraph is finished, but there is a certain unreality or hyper-reality to many of these instruments. Aside from the often pristine performances, they also allow composers to write in a way that is not practical in the real world – 16 horns in a section playing pianissimo, an entire double string section with one half playing legato and the other pizzicato, double-stopping flutes – which can push into the uncanny valley of musical expression. The lushness and richness that can be achieved by layering different sample libraries and different techniques has become a major influence on composers working with traditional orchestras, as they struggle to recreate the scores for human hands. The layering and processing of symphonic samples has resulted in ‘synth’ sounds (such as the famous Hans Zimmer bwooooooorp) that have (again) moved sampling out of the world of mimicry and into an instrument in its own right.

The Future

Guessing where technology goes in the future is a futile endeavour (we could theoretically go back to robot orchestras having the same function as the fairground organs of the 19th century) but the increased ability of modern computers to manipulate sounds and samples surely will provide new avenues for exploration and expression.

One of the more interesting fields to emerge in the last decade is modelling. This is a technology that starts in the same way as a sample capture – a recording session of a real instrument – but then stores the information as a set of incredibly complicated equations. This mathematical profile (rather than audio waveform) is then fed the information of the music you want to play (notes, pedals, modulation, any parameters it knows what to do with) as well as other information about the setup of the instrument (tuning, resonance, room sound) and the equations spit out audio in real time. This technology has followed the trend of the whole history of sampling – starting with organs, then pianos (Modartt’s Pianoteq has been popular in recent times for its range of pianos and pianofortes) and now into solo string and wind instruments. These instruments need very little memory, not coming with gigabytes of multichannel recordings, but instead use the processing power of the CPU and GPU to generate the instrument on the fly.
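To give a feel for sound that is computed rather than replayed, here is a deliberately tiny sketch. It is not the method used by Pianoteq or any other product, and it skips the recording-and-analysis stage entirely; it is the classic Karplus-Strong plucked-string algorithm, swapped in because it is one of the smallest well-known examples of a rule generating audio on the fly. The function name `pluck` and all of its parameter values are assumptions made for the example.

```python
import random


def pluck(frequency, duration, sample_rate=44100, damping=0.996, seed=0):
    """Generate a plucked-string tone with the Karplus-Strong algorithm.

    No recording is stored: a short burst of noise circulates in a
    delay line and is gently averaged on every pass, so high
    frequencies die away first, much as they do on a real string.
    """
    rng = random.Random(seed)  # seeded so the result is repeatable
    period = max(2, int(sample_rate / frequency))  # delay length sets pitch
    line = [rng.uniform(-1.0, 1.0) for _ in range(period)]  # the 'pluck'
    out = []
    for i in range(int(duration * sample_rate)):
        current = line[i % period]
        following = line[(i + 1) % period]
        out.append(current)
        # Average neighbouring values and lose a little energy each pass.
        line[i % period] = damping * 0.5 * (current + following)
    return out


# Half a second of a note near 220 Hz (the A below middle C).
note = pluck(220.0, 0.5)
```

The whole instrument here is a few numbers and a rule, which is why modelled instruments need so little memory compared with a sample library; the price is that every sample of audio has to be calculated as it is played.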

The last century has been a story of increasing power and flexibility in these instruments, allowing customisation to levels not even possible in the physical world. This has opened up opportunities for musicians, composers and performers and shaped the music that we have listened to and enjoyed, entering the public consciousness and the cultural landscape and hiding in plain sight. A sampler is just as hard to play as a wooden violin or organ, and requires musical and technical expertise as well as knowledge of the specific instrument. It has created whole new categories of musical occupation and enabled the work of countless musicians.